Now I was able to reproduce the error.

Analyzing a series of logs from the moments when the memory allocation jump happens, I discovered a pattern: the jump occurs when the query string contains accented characters, especially when the query has an accented U near the end.

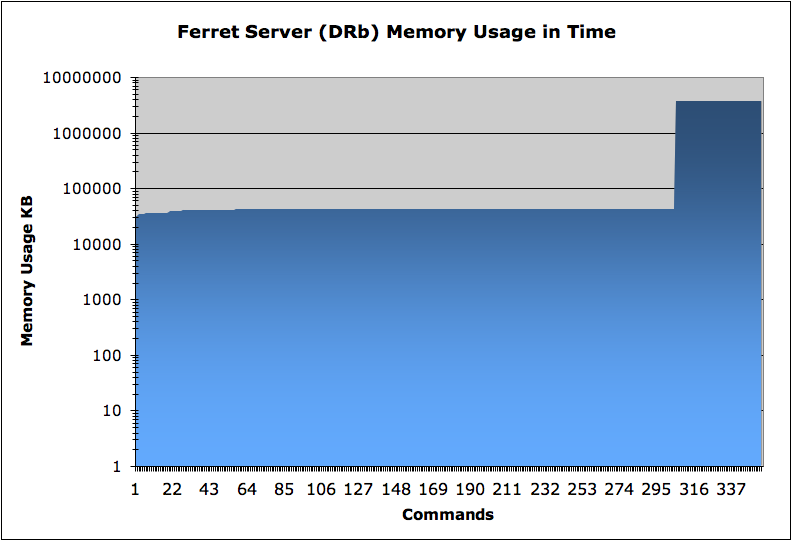

Searching for “fas jús”, “videos nús” and similar queries causes the allocated memory to jump instantly from ~40MB to ~3.7GB, and the server fails on the next search with a similar query.

Also, I noticed that in ferret_server.log the query sometimes appears altered, with extra characters at its end, see:

I searched for “fas jús” and the log showed this:

call index method: find_id_by_contents with ["faz j?s ", {:sort=>[posted_at_sort:!], :offset=>0, :limit=>10}] - server memory: 36288 KB

The next call shows that the memory jump had just happened:

call index method: find_id_by_contents with ["PORTA ABERTA", {:sort=>[posted_at_sort:!], :offset=>0, :limit=>10}] - server memory: 3720856 KB

Again, when searching for “videos manús” the log shows “video man?s(?” (note the garbage at the end), and the memory jumped to 3.7GB.

Then, searching for “urubús”, the log shows "urub?s " (note the extra spaces) and the server fails with the FATAL error.
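Since the log replaces every accented character with a question mark, one thing that may be worth checking is how the query bytes are encoded when they reach the server. The following is only a sketch of how to inspect that: hex_bytes is a hypothetical helper, the queries are the ones quoted above, and it needs Ruby 1.9 or later for String#encode (the server in this report runs Ruby 1.8). It shows how the characters are represented, not why ú in particular triggers the problem.

```ruby
# Sketch only: shows how a query string is represented in two common
# encodings. Needs Ruby 1.9+ for String#encode.

# Hypothetical helper: returns the bytes of `str` in `encoding` as hex.
def hex_bytes(str, encoding)
  str.encode(encoding).bytes.map { |b| format("%02X", b) }.join(" ")
end

# Queries taken from the report; "\u00FA" is ú and "\u00E1" is á.
queries = ["fas j\u00FAs", "urub\u00FAs", "dever\u00E1s"]

queries.each do |q|
  puts q.inspect
  puts "  UTF-8:      #{hex_bytes(q, 'UTF-8')}"
  puts "  ISO-8859-1: #{hex_bytes(q, 'ISO-8859-1')}"
end
```

In ISO-8859-1 each accented letter is a single byte (ú is FA), while in UTF-8 it takes two bytes (ú is C3 BA), so comparing this output with the raw bytes the server receives could show which encoding is actually in use.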

Once I also got this message on the DRb server:

/opt/csw/lib/ruby/1.8/drb/drb.rb:941: warning: setvbuf() can't be honoured (fd=37)

Finally, I ran a sequence alternating the accented character between á, é, í, ó and ú to see if the same happens with other characters. It only happened with the accented U and not with the others, see:

[Sun Apr 08 17:26:09 BRT 2007] Info > Starting…

QUERY “deverás” > call index method: find_id_by_contents with ["dever?s", {:sort=>[posted_at_sort:!], :offset=>0, :limit=>10}] - server memory: 30744 KB

QUERY “deverés” > call index method: find_id_by_contents with ["dever?s", {:sort=>[posted_at_sort:!], :offset=>0, :limit=>10}] - server memory: 34168 KB

QUERY “deverís” > call index method: find_id_by_contents with ["dever?s", {:sort=>[posted_at_sort:!], :offset=>0, :limit=>10}] - server memory: 34956 KB

QUERY “deveros” > call index method: find_id_by_contents with ["dever?", {:sort=>[posted_at_sort:!], :offset=>0, :limit=>10}] - server memory: 35156 KB

QUERY “deverús” > call index method: find_id_by_contents with ["dever?s ", {:sort=>[posted_at_sort:!], :offset=>0, :limit=>10}] - server memory: 35696 KB ===> MEMORY JUMP & GARBAGE ON LOG!!!

QUERY “jump” > call index method: find_id_by_contents with ["jump", {:sort=>[posted_at_sort:!], :offset=>0, :limit=>10}] - server memory: 3724812 KB <<< THIS IS THE MEMORY AFTER THE “deverús” QUERY!!!
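Because each log entry seems to report the memory as it was before its own query ran, the jump shows up one call after the query that caused it. For scanning a larger log, that offset can be handled mechanically. This is a minimal sketch, assuming each entry sits on a single line in the format quoted above; ENTRY, memory_jumps and the 1 GB threshold are hypothetical names and values chosen for illustration, and the real ferret_server.log layout may differ.

```ruby
# Matches the quoted query and the reported memory of one log entry.
ENTRY = /find_id_by_contents with \["(.*?)",.*?memory: (\d+) KB/

# Returns one hash per jump larger than threshold_kb between two
# consecutive entries. The suspect is the query of the EARLIER entry,
# since the later entry only reports the memory left behind by it.
def memory_jumps(lines, threshold_kb = 1_000_000)
  entries = lines.map { |line| ENTRY.match(line) }.compact
                 .map { |m| { query: m[1], kb: m[2].to_i } }
  jumps = []
  entries.each_cons(2) do |prev, cur|
    next unless cur[:kb] - prev[:kb] > threshold_kb
    jumps << { suspect: prev[:query], before_kb: prev[:kb], after_kb: cur[:kb] }
  end
  jumps
end

# Three entries copied from the log excerpt in this report.
SAMPLE_LOG = [
  'call index method: find_id_by_contents with ["dever?s", {:sort=>[posted_at_sort:!], :offset=>0, :limit=>10}] - server memory: 34956 KB',
  'call index method: find_id_by_contents with ["dever?s ", {:sort=>[posted_at_sort:!], :offset=>0, :limit=>10}] - server memory: 35696 KB',
  'call index method: find_id_by_contents with ["jump", {:sort=>[posted_at_sort:!], :offset=>0, :limit=>10}] - server memory: 3724812 KB'
]

memory_jumps(SAMPLE_LOG).each do |j|
  puts "suspect #{j[:suspect].inspect}: #{j[:before_kb]} KB -> #{j[:after_kb]} KB"
end
```

On the three sample entries this flags the query with the trailing garbage rather than the harmless “jump” query that follows it.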